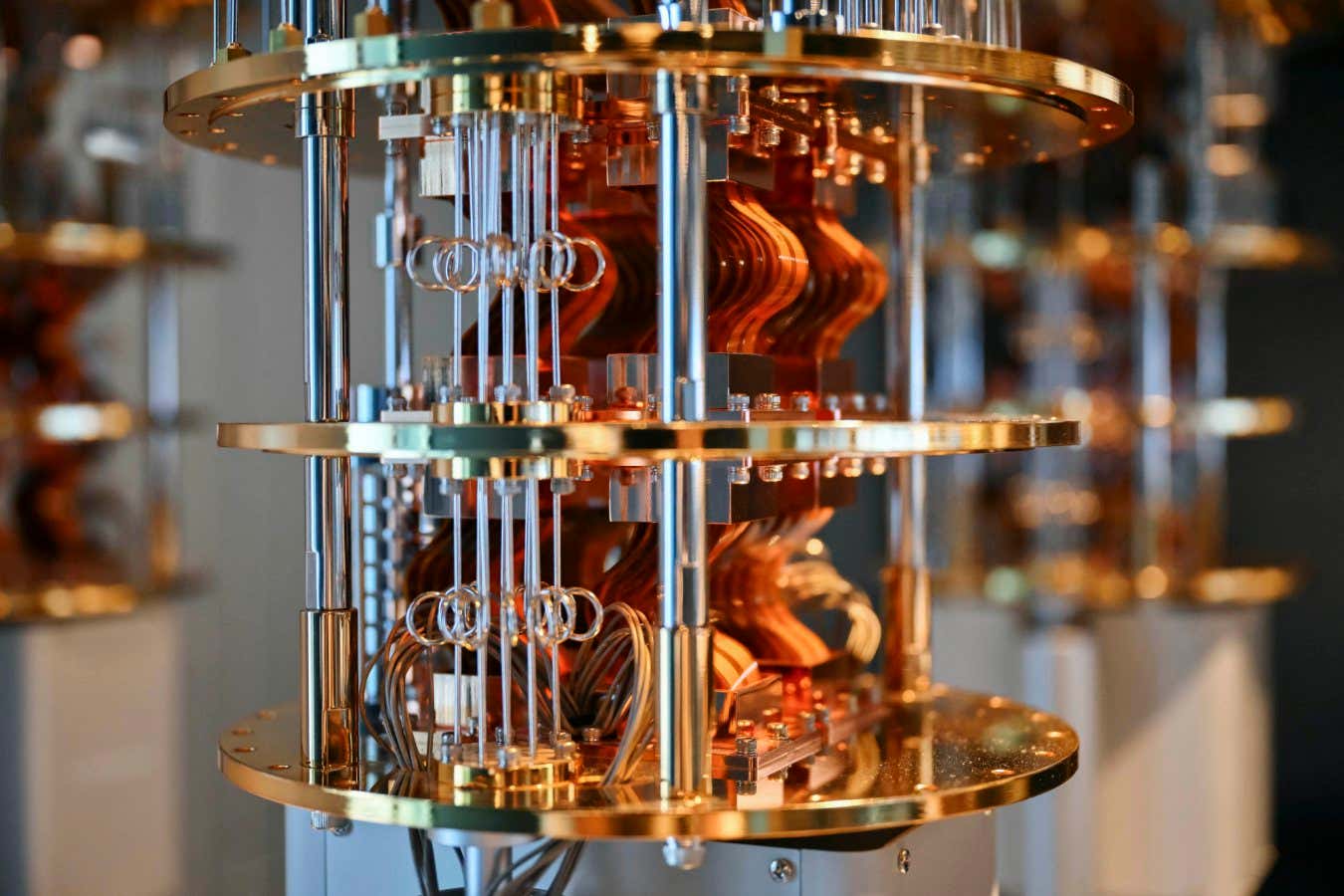

Parts of an IBM quantum computer on display

ANGELA WEISS/AFP via Getty Images

A quantum computer and conventional supercomputer that work together could become an invaluable tool for understanding chemicals. A collaboration between IBM and the Japanese scientific institute RIKEN has now established one path to getting there.

Predicting what a molecule will do within a reaction – for instance, as part of a medical treatment or an industrial catalyst – often hinges on understanding its electrons’ quantum states. Quantum computers could accelerate the process of computing these states, but in their current form, they are still prone to errors. Conventional supercomputers can catch those mistakes before they become a problem.

In a joint statement to New Scientist, Seiji Yunoki and Mitsuhisa Sato at RIKEN said quantum computers can push traditional computers to new capabilities. Now they and their colleagues have used IBM’s Heron quantum computer and RIKEN’s Fugaku supercomputer to model molecular nitrogen, as well as two different molecules made from iron and sulphur.

The researchers used up to 77 quantum bits, or qubits, and an algorithm called SQD to divide the computation of molecules’ quantum states between the machines. The quantum computer made calculations while the supercomputer checked for and corrected errors. For instance, if Heron produced a mathematical function describing more electrons than contained in the molecule at hand, Fugaku would discard that part of the solution and have Heron update and repeat the calculation.
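The discard step described above can be pictured with a small sketch. It is an illustration only, not the researchers' implementation: it assumes each quantum measurement comes back as a bitstring in which every 1 marks an occupied electron orbital, and the function name `filter_by_electron_count` and the sample data are hypothetical. The real SQD workflow does considerably more than this simple filter.

```python
from collections import Counter


def filter_by_electron_count(samples, n_electrons):
    """Keep only sampled bitstrings whose number of 1s equals n_electrons.

    `samples` maps a bitstring (assumed encoding: '1' = occupied orbital)
    to the number of times it was measured. This is a hypothetical helper
    for illustration, not part of any quantum computing library.
    """
    kept = Counter()
    for bitstring, count in samples.items():
        if bitstring.count("1") == n_electrons:
            kept[bitstring] += count
    return dict(kept)


# Made-up measurement counts for a toy system with 2 electrons in 4 orbitals.
samples = {"1100": 40, "1010": 35, "1110": 15, "1000": 10}

# "1110" describes 3 electrons and "1000" describes 1, so both are dropped.
valid = filter_by_electron_count(samples, n_electrons=2)
print(valid)
```

In this toy case, a quarter of the measurements are thrown away because they describe the wrong number of electrons, and only the physically sensible ones would be passed on to the classical calculation.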

This hybrid method doesn’t yet surpass the best-case scenario of what a supercomputer could do alone, but it is competitive with some standard approaches, says Jay Gambetta at IBM, who was not involved with the experiment. “It’s just about comparing computational tools.”

In the near term, this intervention by a conventional computer is the “secret sauce” for getting error-prone quantum computers to do chemistry, says Kenneth Merz at the Cleveland Clinic in Ohio. Using a different IBM quantum computer yoked to a classical computer, his team developed a variation of the SQD algorithm that can model molecules in solutions, which is a more realistic representation of chemical experiments than previous models.

In Merz’s view, further optimisations of SQD could help the combination of quantum and conventional computing gain tangible advantages over just the latter within the next year.

“The combination of quantum and supercomputing is not only worthwhile – it’s inevitable,” says Sam Stanwyck at computing firm NVIDIA. A realistic use of quantum computing is one where quantum processors are integrated with powerful classical processors in a supercomputer centre, he says. NVIDIA has already developed a software platform that aims to support such hybrid approaches.

Aseem Datar at Microsoft says his firm has its sights set on the “tremendous potential in the combination of quantum computing, supercomputing and AI to accelerate and transform chemistry and material science” as well.

But while quantum computing industry stakeholders champion the idea, many challenges remain. Markus Reiher at ETH Zurich in Switzerland says the results from the RIKEN experiment are encouraging, but it is not yet clear whether this approach will become the preferred way to conduct quantum chemistry computations. For one thing, the accuracy of the quantum-supercomputer pair’s final answer remains uncertain. For another, there are already well-established conventional methods for performing such computations – and they work very well.

The promise of incorporating a quantum computer into the computation process is that it could help model bigger molecules or work more quickly. But Reiher says that scaling up the new approach may be difficult.

Gambetta says a new version of IBM’s Heron quantum computer was installed at RIKEN in June – and it already makes fewer errors than past models. He anticipates even bigger hardware improvements in the near future.

The researchers are also tweaking the SQD algorithm and optimising the way Heron and Fugaku work in parallel to make the process more efficient. Merz says the situation is similar to where conventional supercomputers were in the 1980s: there is no shortage of open problems, but incorporating new technology could deliver big returns.
