Modern enterprises are rich in data that spans multiple modalities—from text documents and PDFs to presentation slides, images, audio recordings, and more. Imagine asking an AI assistant about your company’s quarterly earnings call: the assistant should not only read the transcript but also “see” the charts in the presentation slides and “hear” the CEO’s remarks. Gartner predicts that by 2027, 40% of generative AI solutions will be multimodal (text, image, audio, video), up from only 1% in 2023. This shift underlines how vital multimodal understanding is becoming for business applications. Achieving this requires a multimodal generative AI assistant—one that can understand and combine text, visuals, and other data types. It also requires an agentic architecture so the AI assistant can actively retrieve information, plan tasks, and make decisions on tool calling, rather than just responding passively to prompts.

In this post, we explore a solution that does exactly that—using Amazon Nova Pro, a multimodal large language model (LLM) from AWS, as the central orchestrator, along with powerful new Amazon Bedrock features like Amazon Bedrock Data Automation for processing multimodal data. We demonstrate how agentic workflow patterns such as Retrieval Augmented Generation (RAG), multi-tool orchestration, and conditional routing with LangGraph enable end-to-end solutions that artificial intelligence and machine learning (AI/ML) developers and enterprise architects can adopt and extend. We walk through an example of a financial management AI assistant that can provide quantitative research and grounded financial advice by analyzing both the earnings call (audio) and the presentation slides (images), along with relevant financial data feeds. We also highlight how you can apply this pattern in industries like finance, healthcare, and manufacturing.

Overview of the agentic workflow

The core of the agentic pattern consists of the following stages:

- Reason – The agent (often an LLM) examines the user’s request and the current context or state. It decides what the next step should be—whether that’s providing a direct answer or invoking a tool or sub-task to get more information.

- Act – The agent executes that step. This could mean calling a tool or function, such as a search query, a database lookup, or a document analysis using Amazon Bedrock Data Automation.

- Observe – The agent observes the result of the action. For instance, it reads the retrieved text or data that came back from the tool.

- Loop – With new information in hand, the agent reasons again, deciding if the task is complete or if another step is needed. This loop continues until the agent determines it can produce a final answer for the user.
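
The four stages above can be sketched as a small loop. This is a minimal illustration in plain Python, not the code from the sample notebook: the `reason` function stands in for the LLM's decision, and `lookup_revenue`, its placeholder return value, and the `max_steps` limit are assumptions made for the example.

```python
from typing import Callable, Dict, List, Tuple


def lookup_revenue(company: str) -> str:
    """Hypothetical tool; a real agent would query an API or knowledge base."""
    return f"{company} revenue: placeholder value"


TOOLS: Dict[str, Callable[[str], str]] = {"lookup_revenue": lookup_revenue}


def reason(question: str, observations: List[str]) -> Tuple[str, str]:
    """Stand-in for the LLM: returns ("tool", tool_name) or ("final", answer)."""
    if not observations:
        return ("tool", "lookup_revenue")
    return ("final", f"Based on: {observations[-1]}")


def run_agent(question: str, company: str, max_steps: int = 5) -> str:
    observations: List[str] = []
    for _ in range(max_steps):
        kind, value = reason(question, observations)  # Reason
        if kind == "final":
            return value
        result = TOOLS[value](company)                # Act
        observations.append(result)                   # Observe
        # Loop: the next iteration reasons again with the new observation.
    return "Stopped: step limit reached"              # guard against endless loops
```

The step limit is one simple way to keep a naive agent from looping indefinitely.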

This iterative decision-making enables the agent to handle complex requests that are impossible to fulfill with a single prompt. However, implementing agentic systems can be challenging. They introduce more complexity in the control flow, and naive agents can be inefficient (making too many tool calls or looping unnecessarily) or hard to manage as they scale. This is where structured frameworks like LangGraph come in. LangGraph makes it possible to define a directed graph (or state machine) of potential actions with well-defined nodes (actions like “Report Writer” or “Query Knowledge Base”) and edges (allowable transitions). Although the agent’s internal reasoning still decides which path to take, LangGraph makes sure the process remains manageable and transparent. This controlled flexibility means the assistant has enough autonomy to handle diverse tasks while making sure the overall workflow is stable and predictable.
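
To make the idea of nodes and allowable transitions concrete, the following sketch implements a tiny state machine in plain Python. It is a stand-in for the kind of structure LangGraph provides, not the LangGraph API itself; the `WorkflowGraph` class, the node functions, and the `needs_retrieval` flag are all hypothetical names chosen for illustration.

```python
from typing import Any, Callable, Dict, Set

State = Dict[str, Any]


class WorkflowGraph:
    """Nodes are actions; edges list the transitions each node may take."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], str]] = {}
        self.edges: Dict[str, Set[str]] = {}

    def add_node(self, name: str, action: Callable[[State], str]) -> None:
        self.nodes[name] = action
        self.edges.setdefault(name, set())

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src].add(dst)

    def run(self, start: str, state: State, max_steps: int = 10) -> State:
        current = start
        state["path"] = []
        for _ in range(max_steps):
            if current == "END":
                return state
            state["path"].append(current)
            nxt = self.nodes[current](state)  # the node decides the next step
            if nxt not in self.edges[current]:
                raise ValueError(f"Transition {current} -> {nxt} is not allowed")
            current = nxt
        raise RuntimeError("Step limit reached before END")


def router(state: State) -> str:
    return "query_knowledge_base" if state.get("needs_retrieval") else "report_writer"


def query_knowledge_base(state: State) -> str:
    state["context"] = "retrieved passage (placeholder)"
    return "report_writer"


def report_writer(state: State) -> str:
    state["report"] = f"Report using: {state.get('context', 'no context')}"
    return "END"


graph = WorkflowGraph()
for name, fn in [("router", router),
                 ("query_knowledge_base", query_knowledge_base),
                 ("report_writer", report_writer)]:
    graph.add_node(name, fn)
graph.add_edge("router", "query_knowledge_base")
graph.add_edge("router", "report_writer")
graph.add_edge("query_knowledge_base", "report_writer")
graph.add_edge("report_writer", "END")
```

Calling `graph.run("router", {"needs_retrieval": True})` visits the router, the knowledge base node, and the report writer in that order. A node that tries to jump to a step without a declared edge raises an error, which is the "controlled flexibility" described above.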

Solution overview

This solution is a financial management AI assistant designed to help analysts query portfolios, analyze companies, and generate reports. At its core is Amazon Nova, a multimodal LLM that serves as the central reasoning engine and orchestrator. Amazon Nova processes text, images, or documents (like earnings call slides), and dynamically decides which tools to use to fulfill requests. Amazon Nova is optimized for enterprise tasks and supports function calling, so the model can plan actions and call tools in a structured way. With a large context window (up to 300,000 tokens in Amazon Nova Lite and Amazon Nova Pro), it can manage long documents or conversation history when reasoning.

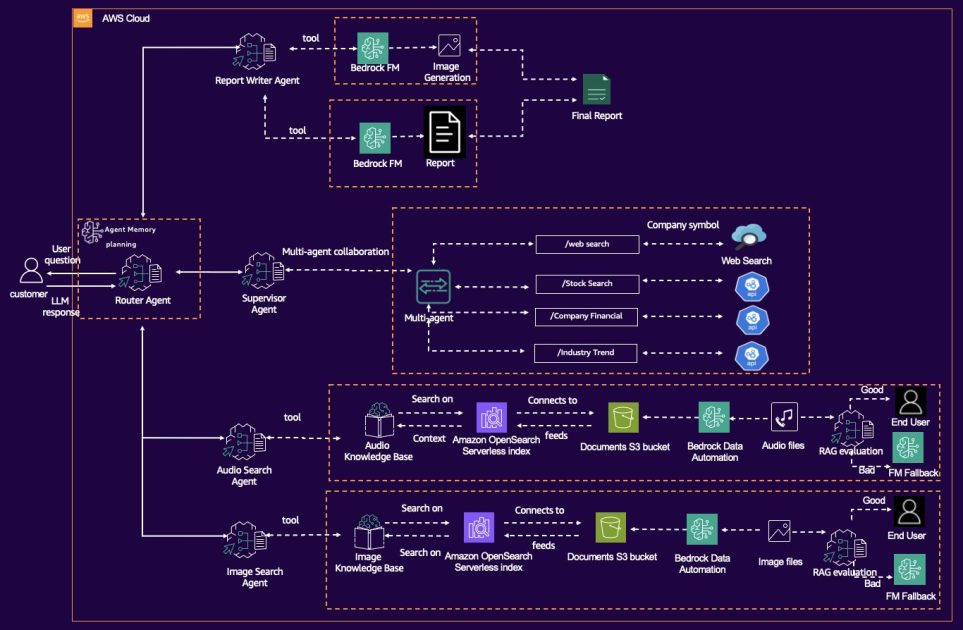

The workflow consists of the following key components:

- Knowledge base retrieval – Both the earnings call audio file and PowerPoint file are processed by Amazon Bedrock Data Automation, a managed service that extracts text, transcribes audio and video, and prepares data for analysis. If the user uploads a PowerPoint file, the system converts each slide into an image (PNG) for efficient search and analysis, a technique inspired by generative AI applications like Manus. Amazon Bedrock Data Automation is effectively a multimodal AI pipeline out of the box. In our architecture, Amazon Bedrock Data Automation acts as a bridge between raw data and the agentic workflow. Then Amazon Bedrock Knowledge Bases converts these chunks extracted from Amazon Bedrock Data Automation into vector embeddings using Amazon Titan Text Embeddings V2, and stores these vectors in an Amazon OpenSearch Serverless database.

- Router agent – When a user asks a question—for example, “Summarize the key risks in this Q3 earnings report”—Amazon Nova first determines whether the task requires retrieving data, processing a file, or generating a response. It maintains memory of the dialogue, interprets the user’s request, and plans which actions to take to fulfill it. The “Memory & Planning” module in the solution diagram indicates that the router agent can use conversation history and chain-of-thought (CoT) prompting to determine next steps. Crucially, the router agent determines if the query can be answered with internal company data or if it requires external information and tools.

- Multimodal RAG agent – For queries related to audio and video information, Amazon Bedrock Data Automation uses a unified API call to extract insights from the multimedia data, and stores the extracted insights in Amazon Bedrock Knowledge Bases. Amazon Nova uses Amazon Bedrock Knowledge Bases to retrieve factual answers using semantic search. This makes sure responses are grounded in real data, minimizing hallucination. If Amazon Nova generates an answer, a secondary hallucination check cross-references the response against trusted sources to catch unsupported claims.

- Hallucination check (quality gate) – To further verify reliability, the workflow can include a postprocessing step using a different foundation model (FM) outside of the Amazon Nova family, such as Anthropic’s Claude, Mistral, or Meta’s Llama, to grade the answer’s faithfulness. For example, after Amazon Nova generates a response, a hallucination detector model or function can compare the answer against the retrieved sources or known facts. If a potential hallucination is detected (the answer isn’t supported by the reference data), the agent can choose to do additional retrieval, adjust the answer, or escalate to a human.

- Multi-tool collaboration – This step introduces multiple tool options, so the AI can not only find information but also take actions before formulating a final answer. The supervisor agent might spawn or coordinate multiple tool-specific agents (for example, a web search agent to do a general web search, a stock search agent to get market data, or other specialized agents for company financial metrics or industry news). Each agent performs a focused task (one might call an API or perform a query on the internet) and returns findings to the supervisor agent. Amazon Nova Pro features a strong reasoning ability that allows the supervisor agent to merge these findings. This multi-agent approach follows the principle of dividing complex tasks among specialist agents, improving efficiency and reliability for complex queries.

- Report creation agent – Another notable aspect in the architecture is the use of Amazon Nova Canvas for output generation. Amazon Nova Canvas is a specialized image-generation model in the Amazon Nova family, but in this context, we use the concept of a “canvas” more figuratively to mean a structured template or format for generated output. For instance, we could define a template for an “investor report” that the assistant fills out: Section 1: Key Highlights (bullet points), Section 2: Financial Summary (table of figures), Section 3: Notable Quotes, and so on. The agent can guide Amazon Nova to populate such a template by providing it with a system prompt containing the desired format (this is similar to few-shot prompting, where the layout is given). The result is that the assistant not only answers ad-hoc questions, but can also produce comprehensive generated reports that look as if a human analyst prepared them, combining text, images, and references to visuals.
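
As an illustration of the report template idea in the last item, the following sketch builds a system prompt from a list of sections. The section names come from the example above; the function name, the prompt wording, and the format hint for "Notable Quotes" are assumptions, and the actual prompt in the sample notebook may differ.

```python
from typing import List, Tuple

# (section name, expected format). The first two formats are from the text;
# the format hint for "Notable Quotes" is an assumption for this example.
INVESTOR_REPORT_SECTIONS: List[Tuple[str, str]] = [
    ("Key Highlights", "bullet points"),
    ("Financial Summary", "table of figures"),
    ("Notable Quotes", "quotes with speaker names"),
]


def build_report_system_prompt(title: str, sections: List[Tuple[str, str]]) -> str:
    """Return a system prompt that spells out the report layout for the model."""
    lines = [
        f"You are drafting a report titled '{title}'.",
        "Fill in every section below, in order, using only the provided sources.",
        "",
    ]
    for number, (name, fmt) in enumerate(sections, start=1):
        lines.append(f"Section {number}: {name} ({fmt})")
    return "\n".join(lines)
```

The resulting string would be passed as the system prompt when invoking the model, so the layout is fixed by the template while the content comes from retrieved data.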

These components are orchestrated in an agentic workflow. Instead of a fixed script, the solution uses a dynamic decision graph (implemented with the open source LangGraph library in the notebook solution) to route between steps. The result is an assistant that feels less like a chatbot and more like a collaborative analyst—one that can parse an earnings call audio recording, critique a slide deck, or draft an investor memo with minimal human intervention.
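
The quality gate and its routing decision could look roughly like the following. In the solution, a separate foundation model grades faithfulness; here a simple word-overlap score stands in for that grader so the example runs without any model call. The 0.8 overlap cutoff, the `threshold`, `max_attempts`, and the step names are all assumptions for illustration.

```python
import re
from typing import List, Set


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def faithfulness_score(answer: str, sources: List[str]) -> float:
    """Fraction of answer sentences whose words mostly appear in the sources.

    A crude lexical stand-in for an LLM-based grader.
    """
    source_words: Set[str] = set()
    for source in sources:
        source_words |= _words(source)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = _words(sentence)
        if words and len(words & source_words) / len(words) >= 0.8:
            supported += 1
    return supported / len(sentences)


def next_step(answer: str, sources: List[str], attempts: int,
              threshold: float = 1.0, max_attempts: int = 2) -> str:
    """Route to the next node based on the grade and the attempts so far."""
    if faithfulness_score(answer, sources) >= threshold:
        return "respond"
    if attempts < max_attempts:
        return "retrieve_more"
    return "escalate_to_human"
```

An answer fully supported by the retrieved sources is returned to the user; an unsupported one triggers more retrieval, and after repeated failures the workflow escalates to a human.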

The following diagram shows the high-level architecture of the agentic AI workflow. Amazon Nova orchestrates various tools, including Amazon Bedrock Data Automation for document and image processing and a knowledge base for retrieval, to fulfill complex user requests. For brevity, we don’t list all the code here; the GitHub repo includes a full working example. Developers can run that example to see the agent in action and extend it with their own data.

Example of the multi-tool collaboration workflow

To demonstrate the multi-tool collaboration workflow, the following example traces how a question-and-answer interaction might flow through the deployed system:

- User prompt – In the chat UI, the end-user asks a question, such as “What is XXX’s stock performance this year, and how does it compare to its rideshare‑industry peers?”

- Agent initial response – The agent (Amazon Nova FM orchestrator) receives the question and responds with:

- Planning and tool selection – The agent determines that it needs the following:

  - The ticker symbol for the company (XXX)

  - Real‑time stock price and YTD changes

  - Key financial metrics (revenue, net income, price-earnings ratio)

  - Industry benchmarks (peer YTD performance, average revenue growth)

- Planning execution using tool calls – The agent calls tools to perform the following actions:

  - Look up ticker symbol:

  - Fetch real‑time stock performance using the retrieved ticker symbol:

  - Retrieve company financial metrics using the retrieved ticker symbol:

  - Gather industry benchmark data using the retrieved ticker symbol:

- Validation loop – The agent runs a validation loop:

  If anything is missing or a tool encountered an error, the FM orchestrator triggers the error handler (up to three retries), then resumes the plan at the failed step.

- Synthesis and final answer – The agent uses Amazon Nova Pro to synthesize the data points and generate final answers based on these data points.
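
The plan, tool calls, and validation loop above can be sketched as follows. This is an illustration only: the tool functions are hypothetical stubs that return placeholder values (not real market data), `XMPL` is a made-up ticker, and the actual tool calls in the deployed system are not shown in this post.

```python
from typing import Any, Callable, Dict, List, Tuple


class ToolError(Exception):
    """Raised by a tool when a call fails."""


def call_with_retries(tool: Callable[..., Any], *args: Any, max_retries: int = 3) -> Any:
    """Call a tool; on ToolError, retry up to max_retries times, then re-raise."""
    retries = 0
    while True:
        try:
            return tool(*args)
        except ToolError:
            if retries == max_retries:
                raise
            retries += 1


# Hypothetical tools with placeholder outputs.
def lookup_ticker(company: str) -> str:
    return "XMPL"


def get_stock_performance(ticker: str) -> Dict[str, str]:
    return {"ticker": ticker, "ytd_change": "placeholder"}


def get_financial_metrics(ticker: str) -> Dict[str, str]:
    return {"ticker": ticker, "revenue": "placeholder", "pe_ratio": "placeholder"}


def get_industry_benchmarks(ticker: str) -> Dict[str, str]:
    return {"ticker": ticker, "peer_ytd": "placeholder"}


STEPS: List[Tuple[str, Callable[[str], Dict[str, str]]]] = [
    ("stock_performance", get_stock_performance),
    ("financial_metrics", get_financial_metrics),
    ("industry_benchmarks", get_industry_benchmarks),
]


def run_plan(company: str) -> Dict[str, Any]:
    """Resolve the ticker, then run each step with retries at the failed step."""
    ticker = call_with_retries(lookup_ticker, company)
    results: Dict[str, Any] = {"ticker": ticker}
    for name, tool in STEPS:
        results[name] = call_with_retries(tool, ticker)
    # Validation: every planned step must have produced data before synthesis.
    missing = [name for name, _ in STEPS if not results.get(name)]
    if missing:
        raise ToolError(f"Missing results for: {missing}")
    return results
```

Because the retry wraps each individual call, a transient failure in one tool does not restart the whole plan; the plan resumes at the step that failed. The collected results would then be handed to the model for synthesis.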

The following figure shows a flow diagram of this multi-tool collaboration agent.

Benefits of using Amazon Bedrock for scalable generative AI agent workflows

This solution is built on Amazon Bedrock because AWS provides an integrated ecosystem for building such sophisticated solutions at scale:

- Amazon Bedrock delivers top-tier FMs like Amazon Nova, with managed infrastructure—no need for provisioning GPU servers or handling scaling complexities.

- Amazon Bedrock Data Automation offers an out-of-the-box solution to process documents, images, audio, and video into actionable data. Amazon Bedrock Data Automation can convert presentation slides to images, convert audio to text, perform OCR, and generate textual summaries or captions that are then indexed in Amazon Bedrock Knowledge Bases.

- Amazon Bedrock Knowledge Bases can store embeddings from unstructured data and support retrieval operations using similarity search.

- In addition to LangGraph (as shown in this solution), you can also use Amazon Bedrock Agents to develop agentic workflows. Amazon Bedrock Agents simplifies the configuration of tool flows and action groups, so you can declaratively manage your agentic workflows.

- Applications developed with open source frameworks like LangGraph (an extension of LangChain) can also run and scale on AWS infrastructure such as Amazon Elastic Compute Cloud (Amazon EC2) or Amazon SageMaker instances, so you can define directed graphs for agent orchestration, making it effortless to manage multi-step reasoning and tool chaining.

You don’t need to assemble a dozen disparate systems; AWS provides an integrated network for generative AI workflows.
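
To show what retrieval by similarity search means in practice, here is a toy example that ranks stored chunks by cosine similarity to a query vector. The three-dimensional vectors and chunk names are invented for illustration; in the solution, the embeddings come from Amazon Titan Text Embeddings V2 and the search runs in Amazon OpenSearch Serverless rather than in application code.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: List[float], index: Dict[str, List[float]],
          k: int = 2) -> List[Tuple[str, float]]:
    """Return the k chunk IDs most similar to the query, best first."""
    scored = [(chunk_id, cosine_similarity(query_vec, vec))
              for chunk_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Made-up embeddings for three hypothetical chunks.
INDEX: Dict[str, List[float]] = {
    "slide_3_revenue_chart": [0.9, 0.1, 0.0],
    "call_transcript_ceo_remarks": [0.1, 0.9, 0.0],
    "slide_7_risk_factors": [0.0, 0.2, 0.9],
}
```

A query vector close to the first axis, such as `[1.0, 0.0, 0.0]`, ranks the revenue chart chunk first because its embedding points in nearly the same direction.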

Considerations and customizations

The architecture demonstrates exceptional flexibility through its modular design principles. At its core, the system uses Amazon Nova FMs, which can be selected based on task complexity. Amazon Nova Micro handles straightforward tasks like classification with minimal latency. Amazon Nova Lite manages moderately complex operations with balanced performance, and Amazon Nova Pro excels at sophisticated tasks requiring advanced reasoning or generating comprehensive responses.
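
Selecting a model by task complexity can be as simple as a lookup. The sketch below assumes three complexity labels of our own choosing; the model IDs follow the Amazon Bedrock naming pattern as we understand it and should be verified against the model IDs available in your account and Region.

```python
from typing import Dict

# Assumed model IDs; confirm them in the Amazon Bedrock console before use.
NOVA_MODEL_IDS: Dict[str, str] = {
    "simple": "amazon.nova-micro-v1:0",    # for example, classification
    "moderate": "amazon.nova-lite-v1:0",   # balanced cost and capability
    "complex": "amazon.nova-pro-v1:0",     # advanced reasoning, long reports
}


def select_model(task_complexity: str) -> str:
    """Map a complexity label to a model ID; reject unknown labels."""
    try:
        return NOVA_MODEL_IDS[task_complexity]
    except KeyError:
        raise ValueError(
            f"Unknown complexity '{task_complexity}'; "
            f"expected one of {sorted(NOVA_MODEL_IDS)}"
        )
```

A router node could call `select_model` before each model invocation, so inexpensive steps use the smaller model and only the synthesis step uses Amazon Nova Pro.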

The modular nature of the solution (Amazon Nova, tools, knowledge base, and Amazon Bedrock Data Automation) means each piece can be swapped or adjusted without overhauling the whole system. Solution architects can use this reference architecture as a foundation, implementing customizations as needed. You can seamlessly integrate new capabilities through AWS Lambda functions for specialized operations, and the LangGraph orchestration enables dynamic model selection and sophisticated routing logic. This architectural approach makes sure the system can evolve organically while maintaining operational efficiency and cost-effectiveness.

Bringing it to production requires thoughtful design, but AWS offers scalability, security, and reliability. For instance, you can secure the knowledge base content with encryption and access control, integrate the agent with AWS Identity and Access Management (IAM) to make sure it only performs allowed actions (for example, if an agent can access sensitive financial data, verify it checks user permissions), and monitor the costs (you can track Amazon Bedrock pricing and tools usage; you might use Provisioned Throughput for consistent high-volume usage). Additionally, with AWS, you can scale from an experiment in a notebook to a full production deployment when you’re ready, using the same building blocks (integrated with proper AWS infrastructure like Amazon API Gateway or Lambda, if deploying as a service).

Vertical industries that can benefit from this solution

The architecture we described is quite general. Let’s briefly look at how this multimodal agentic workflow can drive value in different industries:

- Financial services – In the financial sector, the solution integrates multimedia RAG to unify earnings call transcripts, presentation slides (converted to searchable images), and real-time market feeds into a single analytical framework. Multi-agent collaboration enables Amazon Nova to orchestrate tools like Amazon Bedrock Data Automation for slide text extraction, semantic search for regulatory filings, and live data APIs for trend detection. This allows the system to generate actionable insights—such as identifying portfolio risks or recommending sector rebalancing—while automating content creation for investor reports or trade approvals (with human oversight). By mimicking an analyst’s ability to cross-reference data types, the AI assistant transforms fragmented inputs into cohesive strategies.

- Healthcare – Healthcare workflows use multimedia RAG to process clinical notes, lab PDFs, and X-rays, grounding responses in peer-reviewed literature and patient audio interviews. Multi-agent collaboration excels in scenarios like triage: Amazon Nova interprets symptom descriptions, Amazon Bedrock Data Automation extracts text from scanned documents, and integrated APIs check for drug interactions, all while validating outputs against trusted sources. Content creation ranges from succinct patient summaries (“Severe pneumonia, treated with levofloxacin”) to evidence-based answers for complex queries, such as summarizing diabetes guidelines. The architecture’s strict hallucination checks and source citations support reliability, which is critical for maintaining trust in medical decision-making.

- Manufacturing – Industrial teams use multimedia RAG to index equipment manuals, sensor logs, worker audio conversations, and schematic diagrams, enabling rapid troubleshooting. Multi-agent collaboration allows Amazon Nova to correlate sensor anomalies with manual excerpts, and Amazon Bedrock Data Automation highlights faulty parts in technical drawings. The system generates repair guides (for example, “Replace valve Part 4 in schematic”) or contextualizes historical maintenance data, bridging the gap between veteran expertise and new technicians. By unifying text, images, and time series data into actionable content, the assistant reduces downtime and preserves institutional knowledge, showing that even in hardware-centric fields, AI-driven insights can drive efficiency.

These examples highlight a common pattern: the synergy of data automation, powerful multimodal models, and agentic orchestration leads to solutions that closely mimic a human expert’s assistance. The financial AI assistant cross-checks figures and explanations like an analyst would, the clinical AI assistant correlates images and notes like a diligent doctor, and the industrial AI assistant recalls diagrams and logs like a veteran engineer. All of this is made possible by the underlying architecture we’ve built.

Conclusion

The era of siloed AI models that only handle one type of input is drawing to a close. As we’ve discussed, combining multimodal AI with an agentic workflow unlocks a new level of capability for enterprise applications. In this post, we demonstrated how to construct such a workflow using AWS services: Amazon Nova as the core AI orchestrator with its multimodal, agent-friendly capabilities; Amazon Bedrock Data Automation to automate the ingestion and indexing of complex data (documents, slides, audio) into Amazon Bedrock Knowledge Bases; and an agentic workflow graph (using LangChain or LangGraph) to orchestrate multi-step reasoning, conditional routing, and tool usage. The end result is an AI assistant that operates much like a diligent analyst (researching, cross-checking multiple sources, and delivering insights) but at machine speed and scale.

The solution demonstrates that building a sophisticated agentic AI system is no longer an academic dream; it’s practical and achievable with today’s AWS technologies. By using Amazon Nova as a powerful multimodal LLM and Amazon Bedrock Data Automation for multimodal data processing, along with frameworks for tool orchestration like LangGraph (or Amazon Bedrock Agents), developers get a head start. Many challenges (like OCR, document parsing, or conversational orchestration) are handled by these managed services or libraries, so you can focus on the business logic and domain-specific needs.

The solution presented in the BDA_nova_agentic sample notebook is a great starting point to experiment with these ideas. We encourage you to try it out, extend it, and tailor it to your organization’s needs. We’re excited to see what you will build—the techniques discussed here represent only a small portion of what’s possible when you combine modalities and intelligent agents.

About the authors

Julia Hu is a Sr. AI/ML Solutions Architect at Amazon Web Services, currently focused on the Amazon Bedrock team. Her core expertise lies in agentic AI, where she explores the capabilities of foundation models and AI agents to drive productivity in Generative AI applications. With a background in Generative AI, Applied Data Science, and IoT architecture, she partners with customers, from startups to large enterprises, to design and deploy impactful AI solutions.

Rui Cardoso is a partner solutions architect at Amazon Web Services (AWS). He focuses on AI/ML and IoT. He works with AWS Partners and supports them in developing solutions on AWS. When not working, he enjoys cycling, hiking, and learning new things.

Jessie-Lee Fry is a Product and Go-to-Market (GTM) Strategy executive specializing in Generative AI and Machine Learning, with over 15 years of global leadership experience in Strategy, Product, Customer Success, Business Development, Business Transformation, and Strategic Partnerships. Jessie has defined and delivered a broad range of products and cross-industry go-to-market strategies driving business growth, while navigating market complexities and C-Suite customer groups. In her current role, Jessie and her team focus on helping AWS customers adopt Amazon Bedrock at scale through enterprise use cases and adoption frameworks, meeting customers where they are in their Generative AI journey.
